LEVEL Residency Lab Week 1

As I begin my second week of residency at the LEVEL Centre’s Residency Lab, I want to reflect on the previous week and consider the learning journey, both in developing technical skills in immersive technology and in using this technology when working with participants.

For this residency, my aim is to explore participatory practice through our conscious experiences of online and offline immersive creative spaces. This exploration, aligned with phenomenological philosophies and the basic intentional structure of consciousness, will delve into our first-person perspectives of collaborative physical and online spaces, and explore our individual conscious experiences and their relationship to others, something I feel is informative to co-creation practices in immersive spaces.

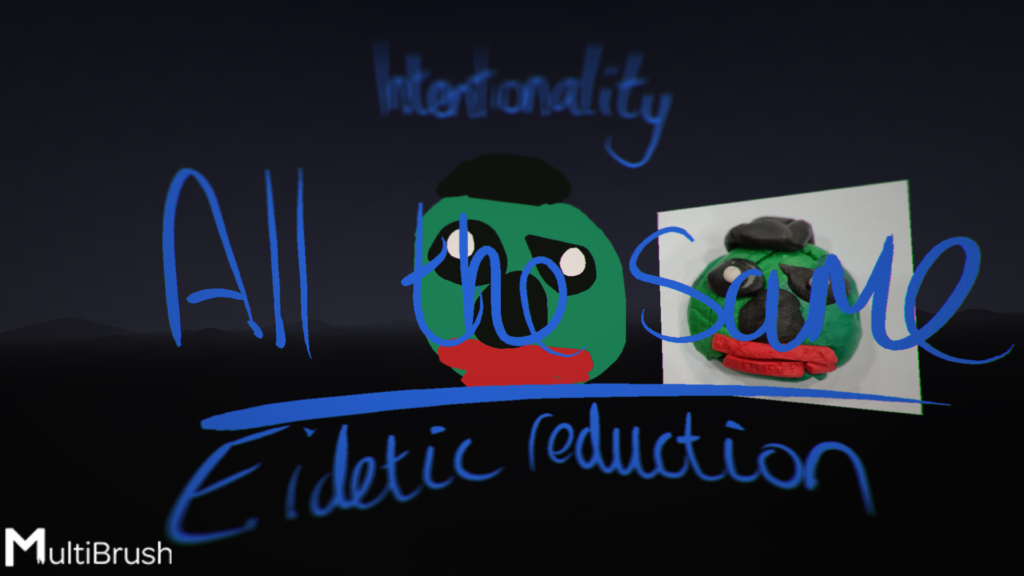

The week was both technically challenging and eye-opening in relation to adapting to participants’ needs. Technically, my aim is to produce an immersive space for participants to explore through projection and VR, as well as an immersive notebook accessible online. These areas of exploration were designed both to test the creative use of the VR application MultiBrush (to create accessible methods of producing participant-made content for immersive spaces) and to test how to present and create in these spaces.

Technically, I learned a lot about translating MultiBrush creations to the game engine Unity. While MultiBrush is a Unity-created application, its brush shaders don’t all translate over to the engine. This resulted in using alternative shaders, as well as additional steps in displaying scanned and image-based assets (e.g. lighting requirements). While the results did not look the same as the originals, I can’t help but consider them to still be part of the same phenomenon, and therefore not detracting from their purpose.

The participatory space used the Mozilla Hubs platform. This provided a great immersive space for participants to see and explore their work, both in VR and through room projection. However, Hubs presented limitations in adding participant-made content through its development platform, Spoke. Spoke appears to be limited when it comes to adding scanned 3D models (even with reductions made where possible) and images. A workaround is to load these items in the live space, then position and pin them in place as an audience member (something that works much better in VR than on desktop). While this isn’t ideal, it does make the installation of work far more participatory and co-produced. The problem is that exhibitions become temporary and open to work being disrupted and changed. On the plus side, it means that anyone visiting an exhibition in Hubs could change it, adding to the co-creative nature of the work and therefore becoming part of the phenomenological experience. I can’t help but imagine what this would be like in a physical gallery exhibition.

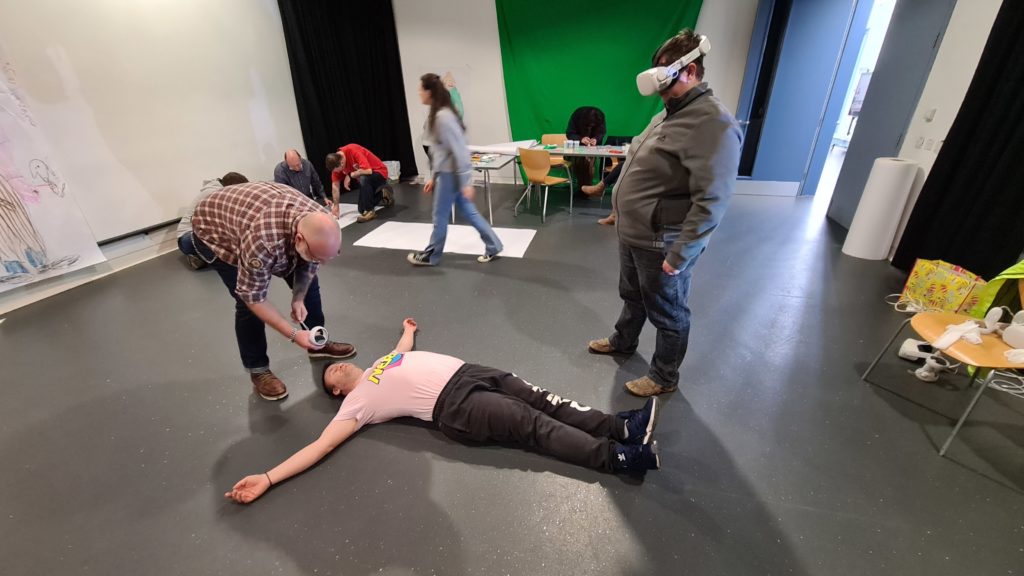

Working with participants was an eye-opening aspect of this work. This is where working in the context of phenomenology really came into play. Not all participants wanted to engage in using VR, and those who did all had very different experiences as individuals. However, as a whole, all were part of the process of creation and exploration, each contributing through the tools most comfortable to them, through the conversations around the technology, and through being immersed in the physical and digital participatory space. Those who didn’t want to use VR created work through drawing and model making. As these works were created, they were digitally scanned or photographed and added to the digital space, building the immersion together.

An exciting aspect of working with these participants was the inclusion of a participant who had both visual and hearing impairments. For people with visual impairments, VR can still provide (in part) an immersive experience through spatial sound and haptic feedback. This participant had tunnel vision, which still allowed for a virtual experience: VR itself offers limited peripheral vision, so their experience would not have been too different from that of a sighted participant. What was really exciting was using the Oculus Quest’s Space Sense feature. Still regarded as experimental, this feature allows users to see objects and movement in front of them as a colourful, cartoon-like outline. It therefore allowed the participant’s BSL translator to communicate with them while they were using the device. The participant’s happy response to this was really wonderful, and it offers exciting possibilities for future accessibility in VR. Current experimental features also include the use of hand tracking to interact in virtual spaces, removing the need for hand controllers and therefore freeing the participant to communicate back. This is something I hope to see in the future of what is now considered the ‘Metaverse’.

You can see more outcomes from this residency over on my LEVEL Residency Lab page.